Major Chinese funder to stop paying fees for 30 pricey open-access journals

Allegations of a Chinese nuclear blast may reignite weapons testing

Wild Chimpanzees May Be Consuming Two Alcoholic Drinks a Day

A banner year for military space funding— with an unclear path beyond

Reconciliation boost lifts 2026 totals, but sustainability questions loom for missile defense and Space Development Agency

The post A banner year for military space funding— with an unclear path beyond appeared first on SpaceNews.

A 3D-Printed Elephant Inside a Living Cell Signals a Bioengineering Breakthrough

A Chilling Massacre in Prehistoric Serbia Took the Lives of Women and Children

A Baby Macaque Has Gone Viral with His Plushie — What Punch Tells Us About Social Hierarchies

Octopuses Pick Up On Invisible Microbial Cues to Avoid Rotting Food

Visible light paints patterns onto chiral antiferromagnets

Researchers at Los Alamos National Laboratory in New Mexico, US have used visible light to both image and manipulate the domains of a chiral antiferromagnet (AFM). By “painting” complex patterns onto samples of cobalt niobium sulfide (Co1/3NbS2), they demonstrated that it is possible to control AFM domain formation and dynamics, boosting prospects for data storage devices based on antiferromagnetic materials rather than the ferromagnetic ones commonly used today.

In antiferromagnetic materials, the spins of neighbouring atoms in the material’s lattice are opposed to each other (they are antiparallel). For this reason, they do not exhibit a net magnetization in the absence of a magnetic field. This characteristic makes them largely immune to disturbances from external magnetic fields, but it also makes them all but invisible to simple electrical and optical probes, and extremely difficult to manipulate.

A special structure

In the new work, a Los Alamos team led by Scott Crooker focused on Co1/3NbS2 because of its topological nature. In this material, layers of cobalt atoms are positioned, or intercalated, between monolayers of niobium disulfide, creating 2D triangular lattices with ABAB stacking. The spins of these cobalt atoms point either toward or away from the centres of the tetrahedra formed by the atoms. The result is a noncoplanar spin ordering that produces a chiral, or “handed,” spin texture.

This chirality affects the motion of electrons in the material because when an electron passes through a chiral pattern of spins, it picks up a geometrical phase known as a Berry phase. This makes it move as if it were “seeing” a region with a real magnetic field, giving the material a nonzero Hall conductivity which, in turn, affects how it absorbs circularly polarized light.

Characterizing a topological antiferromagnet

To characterize this behaviour, the researchers used an optical technique called magnetic circular dichroism (MCD) that measures the difference in absorption between left and right circularly polarized light and depends explicitly on the Hall conductivity.

Similar to the MCD that is measured in well-known ferromagnets such as iron or nickel, the amplitude and sign of the MCD measured in Co1/3NbS2 varied as a function of the wavelength of the light. This dependence occurs because light prompts optical transitions between filled and empty energy bands. “In more complex materials like this, there is a whole spaghetti of bands, and one needs to consider all of them,” Crooker explains. “Precisely which mix of transitions are being excited depends of course on the photon energy, and this mix changes with energy. Sometimes the net response is positive, sometimes negative; it just depends on the details of the band structure.”

To understand the mix of transitions taking place, as well as the topological character of those transitions, scientists use the concept of Berry curvature, which is the momentum-space version of the magnetic field-like effect described earlier. If the accumulated Berry phase is positive (negative), then the electron is moving through a spin texture with right-handed (left-handed) chirality, and this is captured by the Berry curvature of the band structure in momentum space.

Imaging and painting chiral AFM domains

To directly image the domains with positive and negative chirality, the researchers cooled the sample below its ordering temperature, shone light of a particular wavelength onto it, and measured its MCD using a scanning MCD microscope. The sign of the measured MCD value revealed the chirality of the AFM domains.
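As a rough illustration of how a scanned MCD image could be turned into a domain map, the sketch below computes a normalized left-minus-right absorption difference for each pixel and keeps only its sign. The normalization, the function names and the sample numbers are assumptions made for illustration, not details of the Los Alamos setup. Because the sign of the MCD varies with wavelength, which sign corresponds to which chirality is also just a convention here.

```python
def mcd_map(abs_left, abs_right):
    """Per-pixel MCD: difference in absorption between left and right
    circularly polarized light, normalized by their sum (an assumed convention)."""
    return [
        [(l - r) / (l + r) for l, r in zip(row_l, row_r)]
        for row_l, row_r in zip(abs_left, abs_right)
    ]


def chirality_map(mcd, threshold=0.0):
    """Label each pixel +1 or -1 from the sign of its MCD value.
    Pixels with |MCD| <= threshold are labelled 0 (undetermined)."""
    labels = []
    for row in mcd:
        labels.append(
            [1 if v > threshold else -1 if v < -threshold else 0 for v in row]
        )
    return labels


# Hypothetical 2 x 2 scan: absorption of left- and right-handed light per pixel.
left = [[0.52, 0.48], [0.50, 0.51]]
right = [[0.48, 0.52], [0.50, 0.49]]
domains = chirality_map(mcd_map(left, right))
```

A non-zero `threshold` would let noisy pixels near a domain wall stay unlabelled rather than being forced into one chirality.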

To “write” a different chirality into these AFM domains, the researchers again cooled the sample below its ordering temperature, this time in the presence of a small positive magnetic field B, which fixed the sample in a positive chiral AFM state. They then reversed the polarity of B and illuminated a spot of the sample to heat it above the ordering temperature. Once the spot cooled down, the negative-polarity B-field changed the AFM state in the illuminated region into a negative chirality. When the “painting” was finished, the researchers imaged the patterns with the MCD microscope.
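The writing sequence described above can be mimicked with a toy model: field-cool the whole sample into one chirality, reverse the field, then let every pixel that the light spot heats above the ordering temperature re-order with the sign of the applied field. This sketch ignores heat diffusion, domain-wall motion and all material parameters. The grid, the spot radius and the function names are hypothetical.

```python
def field_cool(shape, field_sign):
    """Cool the whole sample in a field: every pixel takes the field's sign."""
    rows, cols = shape
    return [[field_sign] * cols for _ in range(rows)]


def write_spot(domains, centre, radius, field_sign):
    """Heat a circular spot above the ordering temperature and let it cool
    in the applied field, so pixels inside the spot take the field's sign."""
    r0, c0 = centre
    new = [row[:] for row in domains]  # copy so the input map is not mutated
    for r, row in enumerate(new):
        for c in range(len(row)):
            if (r - r0) ** 2 + (c - c0) ** 2 <= radius ** 2:
                row[c] = field_sign
    return new


def paint(domains, path, radius, field_sign):
    """Move the heated spot along a path of (row, col) positions."""
    for centre in path:
        domains = write_spot(domains, centre, radius, field_sign)
    return domains


# Field-cool into the positive state, reverse the field, then draw a short line.
sample = field_cool((5, 5), +1)
sample = paint(sample, [(2, 1), (2, 2), (2, 3)], radius=0, field_sign=-1)
```

Pixels outside the heated path keep their original chirality because, in this model, the small reversed field alone cannot flip an ordered domain.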

In the past, a similar thermo-magnetic scheme gave rise to ferromagnetic-based data storage disks. This work, which is published in Physical Review Letters, marks the first time that light has been used to manipulate AFM chiral domains – a fundamental requirement for developing AFM-based information storage technology and spintronics. In the future, Crooker says the group plans to extend this technique to characterize other complex antiferromagnets with nontrivial magnetic configurations, use light to “write” interesting spatial patterns of chiral domains (patterns of Berry phase), and see how this influences electrical transport.

The post Visible light paints patterns onto chiral antiferromagnets appeared first on Physics World.

The Last Mystery of Antarctica’s ‘Blood Falls’ Has Finally Been Solved

Lockheed Martin presses case that GPS upgrade will counter jamming threats

GPS IIIF features, including regional spot beams, will ‘change the calculus’ for adversaries

The post Lockheed Martin presses case that GPS upgrade will counter jamming threats appeared first on SpaceNews.

The Commercial Space Federation Releases New White Paper “Perfecting Public-Private Partnerships”

February 24, 2026 – Washington, DC – The Commercial Space Federation (CSF) is proud to announce the release of a new paper titled “Perfecting Public-Private Partnerships: The Future of Government […]

The post The Commercial Space Federation Releases New White Paper “Perfecting Public-Private Partnerships” appeared first on SpaceNews.

Could dewdrops explain why plants are flowering earlier?

At Colorado space firms, Hegseth casts Pentagon bureaucracy as the enemy

Hegseth contrasts “builders” with Beltway primes as administration leans into procurement overhaul

The post At Colorado space firms, Hegseth casts Pentagon bureaucracy as the enemy appeared first on SpaceNews.

LambdaVision books space on Starlab commercial space station

LambdaVision, a company that has used microgravity experiments on the International Space Station to develop an artificial retina, has booked space on a planned commercial successor.

The post LambdaVision books space on Starlab commercial space station appeared first on SpaceNews.

Sophia Space claims $10 million in seed round

SAN FRANCISCO – Sophia Space raised $10 million in seed funding to accelerate development of space-based edge computers and orbital data centers. “The $10 million round is a validation that we are progressing from slideware to hardware,” Rob DeMillo, Sophia Space CEO and co-founder, told SpaceNews. “And it’s evidence that capital is shifting towards orbital-compute […]

The post Sophia Space claims $10 million in seed round appeared first on SpaceNews.

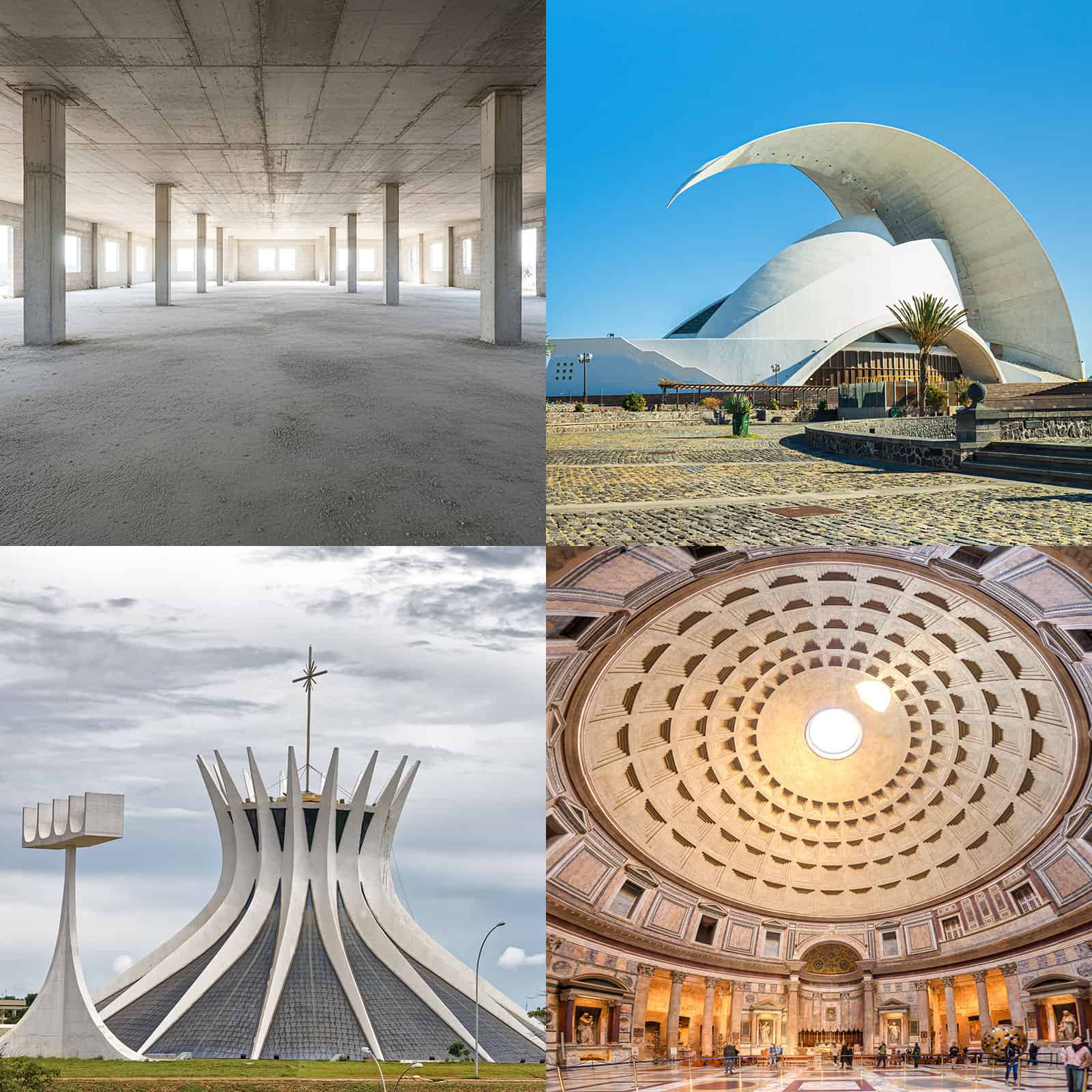

Green concrete: paving the way for sustainable structures

Grey, ugly, dull. Concrete is not the most exciting material in the world. That is, until you start to think about its impact on our lives. Concrete is the second most consumed material on the planet after water. Humanity uses about 30 billion tonnes of the stuff every year, the equivalent of building an entire new New York City every month. Put another way, there is so much concrete in the world and so much being made that by the 2040s it will outweigh all living matter.

As the son of a builder, I have made a few concrete mixes over the years myself, usually following my father’s tried and trusted recipe. Take one part cement (fine mineral powder), two parts sand, and four parts aggregate (crushed stone), then mix and add enough water until it all goes gloopy.
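For readers who want to scale the recipe, here is a minimal sketch that splits a batch of dry ingredients in the 1:2:4 ratio. It assumes the parts are measured in the same unit throughout (for example, bucketfuls) and leaves out the water, which the recipe adds by feel. The function name is hypothetical.

```python
# Parts of each dry ingredient in the recipe quoted above.
MIX_PARTS = {"cement": 1, "sand": 2, "aggregate": 4}


def batch(total_dry_quantity, parts=MIX_PARTS):
    """Split a total dry quantity between ingredients in proportion to their parts."""
    total_parts = sum(parts.values())
    return {name: total_dry_quantity * p / total_parts for name, p in parts.items()}


# 70 bucketfuls of dry mix in total.
quantities = batch(70)
```

Here `quantities` comes out as 10 parts cement, 20 parts sand and 40 parts aggregate.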

The ubiquity and low cost of these simple ingredients are just two of the reasons for concrete’s global reach. In liquid form, it can be moulded into almost any shape, and once set, it is as hard and durable as stone. What’s more, it doesn’t burn, rot or get eaten by animals.

These factors make concrete the ideal material for everything from vast imposing dams to sleek kitchen floors. However, its gargantuan presence across society comes at an equally epic environmental cost. If concrete were a country, it would rank third behind only the US and China as a greenhouse gas emitter.

Though raw material processing and transport of concrete are part of the problem, concrete’s biggest environmental impact comes from the heat and chemical processes involved in producing cement. Ordinary cement clinker (the raw form of cement before it is ground to a powder) is the product of heating limestone up to 1450 °C until it breaks apart into lime and carbon dioxide (CO2). This heating requires lots of energy and the chemical process releases huge amounts of the greenhouse gas CO2 – meaning that cement makes up around 90% of the carbon footprint of an average concrete mix.
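The chemical part of those emissions follows from the stoichiometry of limestone breaking down into lime and carbon dioxide (CaCO3 → CaO + CO2). The sketch below works out the mass of CO2 released per tonne of limestone and per tonne of lime using standard atomic masses. It treats the limestone as pure calcium carbonate and ignores the fuel burned to reach 1450 °C, so it is an idealized estimate rather than an industry figure.

```python
# Standard atomic masses in g/mol.
ATOMIC_MASS = {"Ca": 40.078, "C": 12.011, "O": 15.999}

CACO3 = {"Ca": 1, "C": 1, "O": 3}  # limestone, assumed pure calcium carbonate
CAO = {"Ca": 1, "O": 1}            # lime
CO2 = {"C": 1, "O": 2}             # carbon dioxide


def molar_mass(formula):
    """Molar mass in g/mol from a mapping of element symbol to atom count."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


# Tonnes of CO2 released per tonne of limestone decomposed.
co2_per_tonne_limestone = molar_mass(CO2) / molar_mass(CACO3)

# Tonnes of CO2 released per tonne of lime produced.
co2_per_tonne_lime = molar_mass(CO2) / molar_mass(CAO)
```

The two ratios come out at roughly 0.44 and 0.78, so in this idealized picture nearly half the mass of the limestone leaves the kiln as CO2.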

In the UK and some other parts of the world, this climate impact is well recognized, with the industry having made significant efforts to decarbonize over the last few decades. “Since 1990, the UK concrete industry has decreased its direct and indirect environmental impacts by over 53% through various technology levers,” says Elaine Toogood – an architect and senior director at the Mineral Products Association’s Concrete Centre, the UK’s technical hub for all things concrete.

This reduction has been achieved through actions such as fuel switching, decarbonizing electricity and transport networks, and carbon capture technology. “For example, over 50% of all the heat that’s needed to make cement is now supplied by waste-derived fuels,” Toogood adds.

Yet the sheer scale of the global concrete industry means that much more needs to be done to fully mitigate concrete’s carbon impact. Can physics, and more specifically AI, lend a hand?

Low-carbon replacements

Replacing cement – concrete’s least green ingredient – with low-carbon alternatives seems like a good place to start. Two well-proven options have been available for decades.

Fly ash – the by-product of burning coal at power plants – can replace about 30% of cement in concrete mixes. It has been used in the construction of many prominent structures including the Channel Tunnel, which opened in 1994. Blast furnace slag – the by-product of iron and steel production – is another capable replacement, and can make up to 70% of cement content. Slag was used in 2009 to substitute half of the regular cement in the precast concrete units that now make up the sea defences on Blackpool beach.
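To get a feel for what these replacement levels could mean, the sketch below combines them with the article's figure that cement accounts for around 90% of the carbon footprint of an average mix. It assumes the footprint scales linearly with cement content. The `substitute_factor` parameter (the substitute's footprint relative to cement) is hypothetical and defaults to zero, which gives a best-case upper bound rather than a measured saving.

```python
def footprint_saving(replaced_fraction, cement_share=0.9, substitute_factor=0.0):
    """Fractional cut in a mix's carbon footprint when part of the cement is replaced.

    replaced_fraction: share of the cement swapped for a substitute (0 to 1).
    cement_share: share of the mix's footprint attributed to cement.
    substitute_factor: substitute's footprint relative to cement (assumed value).
    """
    return cement_share * replaced_fraction * (1 - substitute_factor)


fly_ash_saving = footprint_saving(0.30)  # about 30% of cement replaced
slag_saving = footprint_saving(0.70)     # up to 70% of cement replaced
```

Under these assumptions the upper bounds are about 27% for fly ash and 63% for slag. Any real footprint attached to the substitute would lower both figures.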

Yet although these waste materials are currently extensively used as cement or concrete additions in the UK and elsewhere, they rely on very polluting sources (coal-fired power plants and blast furnaces) that are gradually being phased out globally to meet climate targets. As a result, fly ash and blast furnace slag are not long-term solutions. New low-carbon materials are needed, which is where physics can play a decisive role.

Based at Debre Tabor University in Ethiopia, Gashaw Abebaw Adanu is an expert in innovative construction materials. In 2021 he and colleagues investigated the potential of partially replacing (0%, 5%, 10%, 15% and 20%) standard cement with ash from burning lowland Ethiopian bamboo leaf, a common local construction waste material (Adv. Civ. Eng 10.1155/2021/6468444). The findings were encouraging. Though the concrete took longer to set with increased bamboo leaf ash content, the material’s strength, water absorption and resistance to sulphate attack (concrete breakdown caused by sulphate ions reacting with the hardened cement paste) improved for 5–10% bamboo leaf ash mixes. The results suggest that up to 10% of cement could be swapped for this local low-carbon alternative.

Steel, copper – or hair?

More recently, Adanu has turned his focus to concrete fibre reinforcement. Adding small amounts of steel, copper or polyethene fibres is known to increase concrete’s ductility and crack resistance by up to 200% and 90%, respectively. The tiny fibres act like micro-stitches throughout the entire mix, transforming concrete from a brittle material into a tough, energy-absorbing composite.

Fibre reinforcement also leads to major cost savings and a reduced carbon footprint, primarily by removing the need for traditional steel rebar and mesh, where 50 kg of steel fibres can often do the work of 100 kg+ of traditional rebar. Eliminating this expensive material also reduces labour and maintenance costs.

In his latest research, Adanu has explored an unexpected alternative fibre reinforcement material that would decrease costs further as it would otherwise go to landfill: human hair (Eng. Res. Express 7 015115). Adanu took waste hair from barbershops in Debre Tabor (with permission, of course), and added it in varying small quantities to standard concrete mixes. “It’s not biodegradable, it’s not compostable, but as a fibre reinforcement material, we found that using 1–2% human hair improves the concrete’s tensile strength, compressive strength, cracking resistance and reduces shrinkage,” says Adanu. “It makes concrete more clean and sustainable, and because it improves the quality of the concrete, it reduces cost at the same time.”

Research like Adanu’s, involving experimentation with local materials, has been the driving force for innovation in construction for millennia. Examples range from the ancient Neolithic practice of boosting mudbricks’ strength by adding local straw, to the Romans’ use of volcanic dust as high-quality cement for concrete constructions like the Pantheon in Rome – a structure that still stands to this day, with its 43.3-m diameter non-reinforced concrete dome remaining the largest in the world. But testing one material at a time is no longer the only way.

Taking a more modern, wide-ranging approach, a team of researchers led by Soroush Mahjoubi and Elsa Olivetti of the Massachusetts Institute of Technology (MIT) recently mined the cement and concrete literature, and a database of over one million rock samples, looking for cement ingredient substitutes (Communications Materials 6 99). The study confirmed the potential not only of the well-known alternatives fly ash and metallurgic slags, but also of various biomass ashes like the bamboo leaf ash Adanu investigated, as well as rice husk, sugarcane bagasse, wood, tree bark and palm oil fuel ashes.

The meta-review also identified various other waste materials with high potential. These include construction and demolition wastes (ceramics, bricks, concrete), waste glass, municipal solid waste incineration ashes, and mine tailings (iron ore, copper, zinc), as well as 25 igneous rock types that could significantly reduce cement’s carbon impact.

AI to the rescue

Although a number of these alternative concrete materials have been known for some time, they have struggled to make an impact, with very few being used to partially replace regular cement in ready-mix concretes. Getting construction companies or concrete contractors to give them a try is no simple task.

“Concrete contractors are used to using certain mixes for certain jobs at certain times of the year, so they can plan a site and project based on how those materials are going to behave,” says Toogood. “Newer mixes act slightly differently when fresh,” she adds, which makes life tricky for those running a construction site, where concrete that behaves in a predictable manner is critical so that things run smoothly and efficiently.

Two physicists – Raphael Scheps and Gideon Farrell – aim to build this trust in low-carbon alternatives through their UK construction technology company Converge. Starting out using sensors to measure the real-time performance of different mixes of concrete in situ, they have built one of the world’s largest datasets on the performance of concrete.

They can now apply an AI model underpinned by physics principles. The program simulates the physical and chemical interactions of different components to predict the performance of a vast number of concrete mixes in a wide range of situations to a high level of accuracy. And this is key, as it builds trust to experiment with lower-carbon mixes. “With projects in the UK and Australia, we’ve helped people tweak the mix that they’re using and achieve quite major carbon savings,” says Scheps. “Anywhere from 10% all the way up to 44%.”

Converge’s AI model is currently used to recommend existing cost-saving concrete recipes, but Scheps sees it becoming more sophisticated over time. “As it starts to uncover the real fundamental physics-based rules for what drives concrete chemistry, our model will make projections for entirely new materials,” he enthuses.

Also exploring the power of AI to optimize concrete production is US company Concrete.ai. Like Converge, Concrete.ai was born from the idea of applying physics principles to optimize traditional materials and industries; specifically, how AI can be used to reduce the carbon footprint of concrete. And also like Converge, the company’s technology rests on one of the world’s largest concrete databases, consisting of vast amounts of different recipes and materials, alongside their associated performances.

Trained on this dataset, Concrete.ai’s generative AI model creates millions of possible mix designs to identify the optimal concrete recipe for any particular application. “The main difference between a solution like Concrete.ai’s and general models like ChatGPT or Gemini is that our goal is really to create recipes that don’t exist yet,” explains chief technology officer and co-founder Mathieu Bauchy. “Popular large language models regurgitate what they have been trained on and tend to hallucinate, whereas our model discovers new recipes that have never been produced before without breaking the laws of physics or chemistry, and in a reliable way.”
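The general idea of generating many candidate mixes and keeping the best one that meets a performance target can be sketched as a simple constrained search. This is not Concrete.ai’s or Converge’s method, which the article does not detail. The two placeholder functions below are made-up stand-ins for a trained performance model and a carbon calculation, and every name and number is hypothetical.

```python
from itertools import product


def candidate_mixes(levels):
    """Yield every combination of the allowed levels for each ingredient."""
    names = list(levels)
    for values in product(*(levels[name] for name in names)):
        yield dict(zip(names, values))


def best_mix(candidates, predict_strength, carbon, min_strength):
    """Return the lowest-carbon candidate whose predicted strength meets the
    requirement, or None if no candidate qualifies."""
    feasible = [mix for mix in candidates if predict_strength(mix) >= min_strength]
    return min(feasible, key=carbon) if feasible else None


def placeholder_strength(mix):
    # Purely illustrative stand-in for a trained model; not real concrete behaviour.
    return 40 - 20 * mix["substitute_fraction"]


def placeholder_carbon(mix):
    # Purely illustrative: footprint relative to a mix with no substitute.
    return 1 - 0.9 * mix["substitute_fraction"]


levels = {"substitute_fraction": [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]}
choice = best_mix(
    candidate_mixes(levels), placeholder_strength, placeholder_carbon, min_strength=35
)
```

With these placeholder functions the search settles on a substitute fraction of 0.2, the highest level that still clears the strength requirement. A real system would replace exhaustive enumeration with a smarter search and the placeholders with models validated against test data.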

Bauchy sees Concrete.ai’s role as a bridge between concrete producers keen to cut their costs and carbon footprint, and innovators like Adanu or the MIT group exploring new low-carbon concrete materials who are unable to demonstrate the performance of these materials in real-world scenarios and at scale.

Circular benefits

It is perhaps apt that the industry most in need of AI insights from the likes of Converge, Concrete.ai and their growing number of competitors is the AI industry itself. New data centres being used to train, deploy and deliver AI applications and services are the cause of a huge spike in the greenhouse gas emissions of tech giants such as Google, Meta, Microsoft and Amazon. And one of the biggest contributors to those emissions is the concrete from which these hyperscale facilities are built.

This is the reason Meta recently partnered with concrete maker Amrize to develop AI-optimized concrete. For Meta’s new 66,500 m² data centre in Rosemount, Minnesota, the partners applied Meta’s AI models and Amrize’s materials-engineering expertise to deliver concrete that met key criteria including high strength and low carbon content, as well as practical performance characteristics like decent cure speed and surface quality. The partners estimate that the custom mix will reduce the total carbon footprint of this concrete by 35%.

“There is an interesting synergy between concrete and AI,” says Bauchy. “AI can help design greener concrete, and on the other hand, concrete can be used to build more sustainable data centres to power AI.” With other tech giants exploring AI’s potential in reducing the carbon footprint of the concrete they use too, it may well be that the very places in which AI is developed become the testbeds for AI-derived sustainable green concrete solutions.

The post Green concrete: paving the way for sustainable structures appeared first on Physics World.

Ancient artifacts hint at earliest protowriting

Hubble spotted a ‘dark galaxy’ that’s at least 99.9% dark matter

Boeing demonstrates large language model for space-grade hardware

SAN FRANCISCO – Before uploading a large language model to space-grade hardware, Boeing Space Mission Systems engineers sought guidance from the hardware manufacturer. “They told us it wasn’t possible, but we are skilled engineers who were going to figure out a pathway to make it happen,” Arvel Chappell III, Boeing Space Mission Systems AI Lab […]

The post Boeing demonstrates large language model for space-grade hardware appeared first on SpaceNews.

Meink, Saltzman make case for Space Force expansion

Meink: How the future force is designed ‘will be critical as the Space Force expands even faster in the next few years’

The post Meink, Saltzman make case for Space Force expansion appeared first on SpaceNews.

Uncovering the Mystery Behind Cold Sensation and Menthol’s Cooling Effect

40,000-Year-Old Stone Age Symbols May Be a Precursor to Written Language