How Reliable Is the Science on Microplastics in the Human Body? Some Experts Urge Caution

Eutelsat has completed the last step in a 5 billion euro ($5.8 billion) refinancing plan to refresh its OneWeb constellation and support Europe’s IRIS² sovereign connectivity program, the French satellite operator announced March 6.

The post Eutelsat completes $5.8 billion refinancing plan appeared first on SpaceNews.

Careers in Quantum, which was held on 5 March 2026, is an unusual event. Now in its seventh year, it’s entirely organised by PhD students who are part of the Quantum Engineering Centre for Doctoral Training (CDT) at the University of Bristol in the UK.

As well as giving them valuable practical experience of creating an event featuring businesses in the burgeoning quantum sector, it also lets them build links with the very firms they – and the students and postdocs who attended – might end up working for.

It's a clever win-win, if you like, with the day featuring talks, panel discussions and a careers fair made up of companies such as Applied Quantum Computing, Duality, Hamamatsu, Orca Computing, Phasecraft, QphoX, Riverlane, Siloton and Sparrow Quantum.

IOP Publishing featured too, with Antigoni Messaritaki talking about her journey from researcher to senior publisher, and Physics World features and careers editor Tushna Commissariat taking part in a panel discussion on careers in quantum.

The importance of communication and other “soft skills” was emphasized by all speakers in the discussion, but what struck me most was a comment by Carrie Weidner, a lecturer in quantum engineering at Bristol, who underlined that it’s fine – in fact important – to learn to fail.

“If you’re resilient and can think critically, you can do anything,” said Weidner, who is also director of the quantum-engineering CDT. She warned too of the dangers of generative AI, joking that “every time you use ChatGPT, your brain is atrophying”.

Another great talk was by Diya Nair, a computer-science undergraduate at the University of Birmingham, who is head of global outreach and UK ambassador for Girls in Quantum.

The organization is now active in almost 70 countries around the world, with the aim of “democratizing quantum education”. As Nair explained, Girls in Quantum does everything from arranging quantum-computing courses and hackathons to creating its crowdfunded quantum-computing game called Hop.

The event also included a discussion about taking quantum research “from concept to commercialization”. It featured Jack Russel Bruce from Universal Quantum, Euan Allen from eye-imaging tech firm Siloton, Joe Longden from Duality Quantum Photonics, and Stewart Noakes, who has mentored numerous companies over the years.

Noakes emphasized that all high-tech firms have three main needs: talent, money and ideas. In fact, as he explained, companies can sometimes suffer from having too much money as well as too little, especially if they grow too fast and hire people on big salaries who might then need to be let go if funding dries up.

Bruce, though, was positive about the overall state of the quantum-tech sector. “For me, the future is bright,” he said. But as all speakers underlined, if you want to join the industry, make sure you’ve got good communication skills, an open-minded attitude – and a willingness to learn on the go.

The post Pathways to a career in quantum: what skills do you need? appeared first on Physics World.

March 6, 2026 – Washington, D.C.—The Commercial Space Federation (CSF) is pleased to welcome Leolabs, the American Society for Gravitational and Space Research (ASGSR), and SurgeStreams. Together, these organizations strengthen […]

The post Commercial Space Federation (CSF) Welcomes New Members appeared first on SpaceNews.

Amid the explosive growth surrounding telecommunications megaconstellations, orbital data centers and next-generation payloads, the space ecosystem is entering a period of rapid and irreversible change. Announcements and filings for satellite constellations numbering in the tens of thousands, hundreds of thousands and now even 1 million-plus are becoming commonplace. The waves that even a fraction of […]

The post Hyperscalers are coming to an orbit near you. Power will decide the winners. appeared first on SpaceNews.

Rocket Lab launched a spacecraft March 5 for a confidential customer, most likely Earth observation company BlackSky.

The post Rocket Lab launches satellite for undisclosed customer appeared first on SpaceNews.

China has designated aerospace to be an “emerging pillar industry” in a draft national economic plan, also setting major objectives for the five years ahead.

The post China designates space sector an “emerging pillar industry,” sets deep space ambitions in new economic blueprint appeared first on SpaceNews.

MRI is one of the most important imaging tools employed in medical diagnostics. But for deep-lying tissues or complex anatomic features, MRI can struggle to create clear images in a reasonable scan time. A research team led by Thoralf Niendorf at the Max Delbrück Center in Germany is using metamaterials to create a compact radiofrequency (RF) antenna that enhances image quality and enables faster MRI scanning.

Imaging the subtle structures of the eye and orbit (the surrounding eye socket) is a particular challenge for MRI, due to the high spatial resolution and small fields-of-view required, which standard MRI systems struggle to achieve. These limitations are generally due to the antennas (or RF coils) that transmit and receive the RF signals. Increasing the sensitivity of these antennas will increase signal strength and improve the resolution of the resulting MR images.

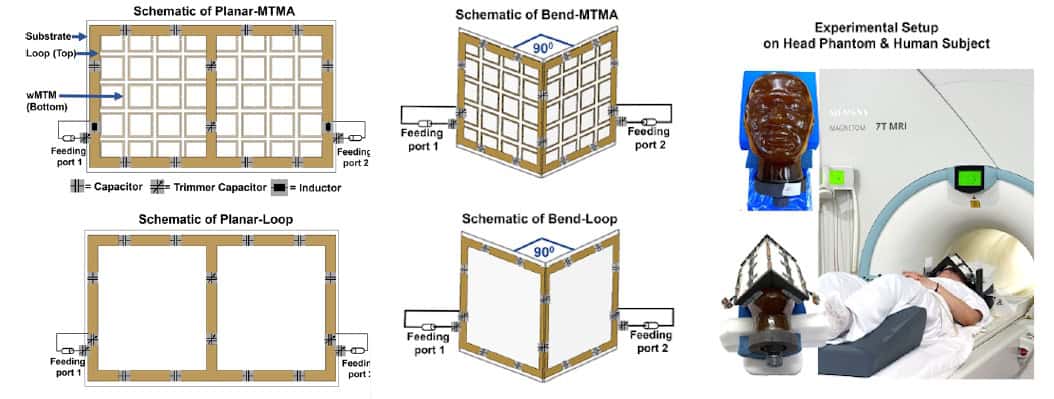

To achieve this, Niendorf and colleagues turned to electromagnetic metamaterials – artificially manufactured, regularly arranged structures made of periodic subwavelength unit cells (UCs) that interact with electromagnetic waves in ways that natural materials do not. They designed the metamaterial UCs based on a double-square split-ring resonator design, tailored for operation at a high magnetic field strength of 7.0 T.

In their latest study, led by doctoral student Nandita Saha and reported in Advanced Materials, the researchers created a metamaterial-integrated RF antenna (MTMA) by fabricating the UCs into a 5 × 8 array. They built two configurations: a planar antenna (planar-MTMA); and a version with a 90° bend in the centre (bend-MTMA) to conform to the human face. For comparison, they also built conventional counterparts without the metamaterial (planar-loop and bend-loop).

The researchers simulated the MRI performances of the four antennas and validated their findings via measurements at 7.0 T. Tests in a rectangular phantom showed that the planar-MTMA demonstrated between 14% and 20% higher transmit efficiency than the planar-loop (assessed via B₁+ mapping).

They next imaged a head phantom, placing planar antennas behind the head to image the occipital lobe (the part of the brain involved in visual processing) and bend antennas over the eyes for ocular imaging. For the planar antennas, B₁+ mapping revealed that the planar-MTMA generated around 21% (axial), 19% (sagittal) and 13% (coronal) higher intensity than the planar-loop. Gradient-echo imaging showed that planar-MTMA also improved the receive sensitivity, by 106% (axial), 94% (sagittal) and 132% (coronal).

The bend antennas exhibited similar trends, with B₁+ maps showing transmit gains of roughly 20% for the bend-MTMA over the bend-loop. The bend-MTMA also outperformed the bend-loop in terms of receive signal intensity, by approximately 30%.

“With the metamaterials we developed, we were able to guide and modulate the RF fields generated in MRI more efficiently,” says Niendorf. “By integrating metamaterials into MRI antennas, we created a new type of transmitter and detector hardware that increases signal strength from the target tissue, improves image sharpness and enables faster data acquisition.”

Importantly, the new MRI antenna design is compatible with existing MRI scanners, meaning that no new infrastructure is needed for use in the clinic. The researchers validated their technology in a group of volunteers, working closely with partners at Rostock University Medical Center.

Before use on human subjects, the researchers evaluated the MRI safety of the four antennas. All configurations remained well below the IEC’s specific absorption rate (SAR) limit. They also assessed the bend-MTMA (which showed the highest SAR) using MR thermometry and fibre-optic sensors. After 30 min at 10 W input power, the temperature increased by about 1.5°C. At 5 W, the increase was below 0.5°C, well within IEC safety thresholds, so this power level was used for the in vivo MRI exams.

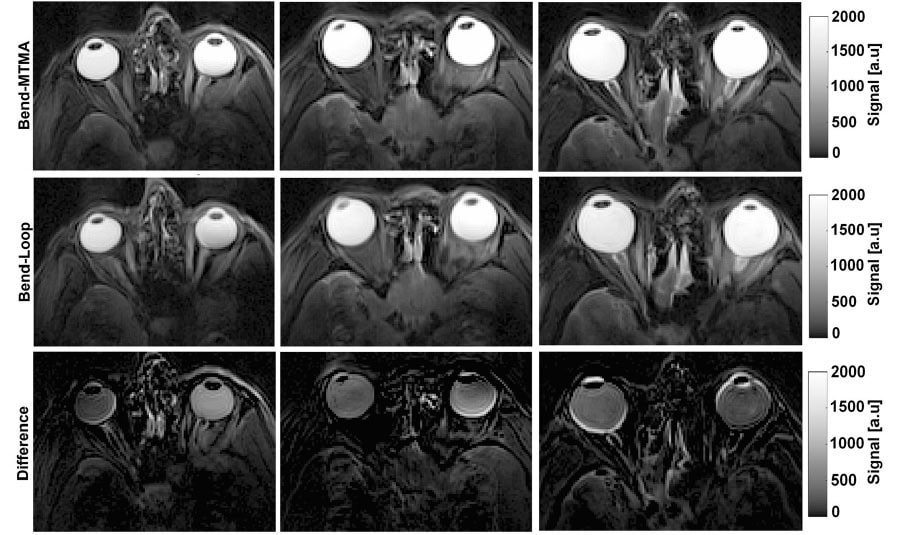

The team first performed MRI of the eye and orbit in three healthy adults, using the bend-loop and bend-MTMA antennas positioned over the eyes. Across all volunteers, the bend-MTMA exhibited better transmit performance in the ocular region than the bend-loop.

The bend-MTMA antenna also generated larger intraocular signals than the bend-loop (assessed via T2-weighted turbo spin-echo imaging), with signal increases of 51%, 28% and 25% in the left eyes, for volunteers 1, 2 and 3, respectively, and corresponding gains of 27%, 26% and 29% for their right eyes. Overall, the bend-MTMA provided more uniform and higher-intensity signal coverage of the ocular region at 7.0 T than the bend-loop.
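For readers who want to see how such percentage gains relate to one another, the short sketch below averages the per-volunteer figures quoted above. It assumes that each reported gain is a simple relative increase of the bend-MTMA signal over the bend-loop signal; the article does not spell out the exact definition, and the `percent_gain` helper and variable names are illustrative only, not taken from the study.

```python
from statistics import mean


def percent_gain(signal_new: float, signal_ref: float) -> float:
    """Relative increase of signal_new over signal_ref, in percent.

    Assumed definition: 100 * (new - ref) / ref. The study may use a
    different normalisation; this is only an illustration.
    """
    if signal_ref <= 0:
        raise ValueError("reference signal must be positive")
    return 100.0 * (signal_new - signal_ref) / signal_ref


# Intraocular signal gains quoted in the article for volunteers 1, 2 and 3
# (bend-MTMA relative to bend-loop, in percent).
left_eye_gains = [51, 28, 25]
right_eye_gains = [27, 26, 29]

# Simple unweighted averages across the three volunteers.
mean_left = mean(left_eye_gains)
mean_right = mean(right_eye_gains)

print(f"mean left-eye gain:  {mean_left:.1f}%")
print(f"mean right-eye gain: {mean_right:.1f}%")
```

With only three volunteers these averages are descriptive rather than statistically meaningful, but they show that the reported gains cluster at roughly a quarter to a third above the conventional antenna, with volunteer 1's left eye as the outlier.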

To further demonstrate clinical application of the bend-MTMA, the team used it to image a volunteer with a retinal haemangioma in their left eye. A 7.0 T MRI scan performed 16 days after treatment revealed two distinct clusters of structural change due to the therapy. In addition, one of the volunteer’s ocular scans revealed a sinus cyst, an unexpected finding that showed the diagnostic benefit of the bend-MTMA being able to image beyond the orbit and into the paranasal sinuses and inferior frontal lobe.

The team used the planar antennas to image the occipital lobe, a clinically relevant target for neuro-ophthalmic examinations. The planar-MTMA exhibited significantly higher transmit efficiency than the planar-loop, as well as higher signal intensity and wider coverage, enhancing the anatomical depiction of posterior brain regions.

“Clearer signals and better images could open new doors in diagnostic imaging,” says Niendorf. “Early ophthalmology applications could include diagnostic confirmation of ambiguous ophthalmoscopic findings, visualization and local staging of ocular masses, 3D MRI, fusion with colour Doppler ultrasound, and physio-metabolic imaging to probe iron concentration or water diffusion in the eye.”

He notes that with slight modifications, the new antennas could enable MRI scans depicting the release and transport of drugs within the body. Their geometry and design could also be tuned to image organs such as the heart, kidneys or brain. “Another pioneering clinical application involves thermal magnetic resonance, which adds a thermal intervention dimension to an MRI device and integrates diagnostic guidance, thermal treatment and therapy monitoring facilitated by metamaterial RF antenna arrays,” he tells Physics World.

The post Metamaterial antennas enhance MR images of the eye and brain appeared first on Physics World.

WARSAW — Polish chemical propulsion startup Liftero has signed a deal with India’s commercial in-orbit servicing specialist OrbitAID where Liftero will supply green chemical propulsion for OrbitAID’s in-orbit servicing spacecraft. Under the contract, Liftero will supply two multi-thruster BOOSTER configurations for an upcoming OrbitAID mission expected in the fourth quarter of 2026. The mission will […]

The post Poland-based Liftero will provide chemical propulsion for Indian firm OrbitAID’s in-orbit servicing mission appeared first on SpaceNews.

The White House’s nominee to be deputy administrator of NASA received bipartisan support at a Senate confirmation hearing March 5.

The post NASA deputy administrator nominee sails through confirmation hearing appeared first on SpaceNews.

IRVINE, Calif., March 5, 2026 — Terran Orbital, a Lockheed Martin Company and a leading satellite solutions provider, announced today the appointment of Kwon Park as senior director of manufacturing […]

The post Terran Orbital Appoints Kwon Park as Senior Director of Manufacturing Operations appeared first on SpaceNews.