New diffractive camera hides images from view

Information security is an important part of our digital world, and various techniques have been deployed to keep data safe during transmission. But while these traditional methods are efficient, the mere existence of an encrypted message can alert malicious third parties to the presence of information worth stealing. Researchers at the University of California, Los Angeles (UCLA), US, have now developed an alternative based on steganography, which aims to hide information by concealing it within ordinary-looking “dummy” patterns. The new method employs an all-optical diffractive camera paired with an electronic decoder network that the intended receiver can use to retrieve the original image.

“Cryptography and steganography have long been used to protect sensitive data, but they have limitations, especially in terms of data embedding capacity and vulnerability to compression and noise,” explains Aydogan Ozcan, a UCLA electrical and computer engineer who led the research. “Our optical encoder-electronic decoder system overcomes these issues, providing a faster, more energy-efficient and scalable solution for information concealment.”

A seemingly mundane and misleading pattern

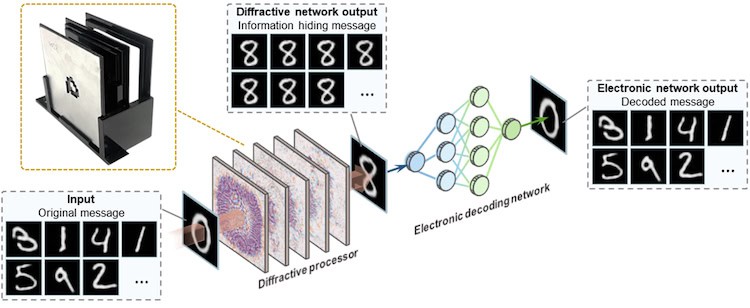

The image-hiding process starts with a diffractive optical process that takes place in a structure composed of multiple layers of 3D-printed features. Light passing through these layers is manipulated to transform the input image into a seemingly mundane and misleading pattern. “The optical transformation happens passively,” says Ozcan, “leveraging light-matter interactions. This means it requires no additional power once physically fabricated and assembled.”

The result is an encoded image that appears ordinary to human observers, but contains hidden information, he tells Physics World.
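To make the optical step more concrete, here is a minimal numerical sketch of light passing through a stack of thin phase-only layers. It is not the researchers' model: the function names, the angular spectrum propagation method, the random phase masks and every parameter value (wavelength, pixel pitch, layer spacing, grid size) are assumptions chosen for illustration. In a real device the layer patterns would be designed so that the output resembles the dummy pattern; the random masks here produce only a scrambled intensity.

```python
import numpy as np


def propagate(field, wavelength, pitch, distance):
    """Free-space propagation of a complex field (angular spectrum method)."""
    ny, nx = field.shape
    fy = np.fft.fftfreq(ny, d=pitch)  # spatial frequencies in cycles per metre
    fx = np.fft.fftfreq(nx, d=pitch)
    fyy, fxx = np.meshgrid(fy, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Components with arg <= 0 are evanescent and are dropped
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def diffractive_encode(image, phase_masks, wavelength, pitch, spacing):
    """Pass an amplitude image through passive phase-only layers.

    Returns the intensity a detector would record after the last layer.
    """
    field = image.astype(complex)
    for phase in phase_masks:
        field = propagate(field, wavelength, pitch, spacing)
        field = field * np.exp(1j * phase)  # thin layer: phase delay only
    field = propagate(field, wavelength, pitch, spacing)
    return np.abs(field) ** 2


# Illustrative values only (assumed, not taken from the study)
rng = np.random.default_rng(0)
image = np.zeros((32, 32))
image[8:24, 14:18] = 1.0  # crude vertical bar standing in for an input digit
masks = [rng.uniform(0.0, 2.0 * np.pi, size=image.shape) for _ in range(3)]
encoded = diffractive_encode(
    image, masks, wavelength=0.75e-3, pitch=1.0e-3, spacing=20e-3
)
```

Because the layers in this sketch only delay the phase of the light and add no gain, the total intensity at the output equals that of the input, which is consistent with the passive, power-free operation Ozcan describes.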

The encoded image is then processed by an electronic decoder, which uses a convolutional neural network (CNN) that has been trained to decode the concealed data and reconstruct the original image. This optical-to-digital co-design ensures that only someone with the appropriate digital decoder can retrieve the hidden information, making it a secure and efficient method of protecting visual data.

A secure and efficient method for visual data protection

The researchers tested their technique using arbitrarily chosen handwritten digits as the input image. The diffractive processor successfully transformed these into a uniform-looking digit 8. The CNN was then able to reconstruct the original handwritten digits using information “hidden” in the 8.

It was not all plain sailing, however, explains Ozcan. For one, the UCLA researchers had to ensure that the digital decoder could accurately reconstruct the original images despite the transformations applied by the diffractive optical processor. They also had to show that the device worked under different lighting conditions.

“Fabricating precise diffractive layers was no easy task either and meant developing the necessary 3D printing techniques to create highly precise structures that can perform the required optical transformations,” Ozcan says.

The technique, which is detailed in Science Advances, could have several applications. Being able to transmit sensitive information securely without drawing attention could be useful for espionage or defence, Ozcan suggests. The security of the technique and its suitability for image transmission might also improve patient privacy by making it easier to safely transmit medical images that only authorized personnel can access. A third application would be to use the technique to improve the robustness and security of data transmitted over optical networks, including free-space optical communications. A final application lies in consumer electronics. “Our device could potentially be integrated into smartphones and cameras to protect users’ visual data from unauthorized access,” Ozcan says.

The researchers demonstrated that their system works at terahertz frequencies. They now aim to extend it to other wavelengths, including visible and infrared, which would broaden the scope of its applications. “Another area [for improvement] is in miniaturization to further reduce the size of the diffractive optical elements to make the technology more compact and scalable for commercial applications,” Ozcan says.

The post New diffractive camera hides images from view appeared first on Physics World.