Ugreen NASync DH2300 Review

UK artist Alison Stott has created a new glass and light artwork – entitled Naturally Focused – that is inspired by the work of theoretical physicist Michael Berry from the University of Bristol.

Stott, who recently completed an MA in glass at Arts University Plymouth, spent over two decades previously working in visual effects for film and television, where she focussed on creating photorealistic imagery.

Her studies touched on how complex phenomena can arise from seemingly simple set-ups, for example in a rotating glass sculpture lit by LEDs.

“My practice inhabits the spaces between art and science, glass and light, craft and experience,” notes Stott. “Working with molten glass lets me embrace chaos, indeterminacy, and materiality, and my work with caustics explores the co-creation of light, matter, and perception.”

The new artwork is based on “caustics” – the curved patterns that form when light is reflected or refracted by curved surfaces or objects.

The focal point of the artwork is a hand-blown glass lens that was waterjet-cut into a circle and polished so that its internal structure and optical behaviour are clearly visible. The lens is suspended within stainless steel gyroscopic rings and held by a brass support and stainless steel backplate.

The rings can be tilted or rotated to “activate shifting field of caustic projections that ripple across” the artwork. Mathematical equations are also engraved onto the brass that describe the “singularities of light” that are visible on the glass surface.

The work is inspired by Berry’s research into the relationship between classical and quantum behaviour and how subtle geometric structures govern how waves and particles behave.

Berry recently won the 2025 Isaac Newton Medal and Prize, which is presented by the Institute of Physics, for his “profound contributions across mathematical and theoretical physics in a career spanning over 60 years”.

Stott says that working with Berry has pushed her understanding of caustics. “The more I learn about how these structures emerge and why they matter across physics, the more compelling they become,” notes Stott. “My aim is to let the phenomena speak for themselves, creating conditions where people can directly encounter physical behaviour and perhaps feel the same awe and wonder I do.”

The artwork will go on display at the University of Bristol following a ceremony to be held on 27 November.

The post ‘Caustic’ light patterns inspire new glass artwork appeared first on Physics World.

WiFi networks could pose significant privacy risks even to people who aren’t carrying or using WiFi-enabled devices, say researchers at the Karlsruhe Institute of Technology (KIT) in Germany. According to their analysis, the current version of the technology passively records information that is detailed enough to identify individuals moving through networks, prompting them to call for protective measures in the next iteration of WiFi standards.

Although wireless networks are ubiquitous and highly useful, they come with certain privacy and security risks. One such risk stems from a phenomenon known as WiFi sensing, which the researchers at KIT’s Institute of Information Security and Dependability (KASTEL) define as “the inference of information about the networks’ environment from its signal propagation characteristics”.

“As signals propagate through matter, they interfere with it – they are either transmitted, reflected, absorbed, polarized, diffracted, scattered, or refracted,” they write in their study, which is published in the Proceedings of the 2025 ACM SIGSAC Conference on Computer and Communications Security (CCS ’25). “By comparing an expected signal with a received signal, the interference can be estimated and used for error correction of the received data.”

An under-appreciated consequence, they continue, is that this estimation contains information about any humans who may have unwittingly been in the signal’s path. By carefully analysing the signal’s interference with the environment, they say, “certain aspects of the environment can be inferred” – including whether humans are present, what they are doing and even who they are.
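The estimation step described above can be illustrated with a minimal Python sketch. It assumes a noise-free link, a known pilot symbol on each subcarrier and a simple per-subcarrier least-squares estimate (received divided by sent). The channel values, the subcarrier count and the function name `estimate_channel` are hypothetical and are not taken from the KASTEL study.

```python
import numpy as np


def estimate_channel(pilot_tx, pilot_rx):
    """Per-subcarrier least-squares channel estimate: H = received / expected."""
    return pilot_rx / pilot_tx


rng = np.random.default_rng(0)
n_subcarriers = 8  # hypothetical, kept tiny for readability

# Known pilot: unit-magnitude QPSK symbols that both ends agree on in advance.
phases = np.pi / 4 + (np.pi / 2) * rng.integers(0, 4, n_subcarriers)
pilot_tx = np.exp(1j * phases)

# Hypothetical channels: an empty room, and the same room with a person in
# the signal path (modelled here as extra attenuation and phase rotation).
h_empty = np.ones(n_subcarriers, dtype=complex)
h_person = h_empty * 0.8 * np.exp(1j * 0.3 * np.arange(n_subcarriers))

rx_empty = h_empty * pilot_tx
rx_person = h_person * pilot_tx

est_empty = estimate_channel(pilot_tx, rx_empty)
est_person = estimate_channel(pilot_tx, rx_person)

# Intended use: undo the channel so the data can be decoded correctly.
recovered = rx_person / est_person

# Side effect: the estimate itself changes when someone stands in the path.
change = np.linalg.norm(est_person - est_empty)
print(f"channel estimate changed by {change:.3f}")
```

The same estimate that makes error correction possible therefore also encodes how the environment altered the signal, which is the information a sensing attack would work from.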

The KASTEL team terms this an “identity inference attack” and describes it as a threat that is as widespread as it is serious. “This technology turns every router into a potential means for surveillance,” says Julian Todt, who co-led the study with his KIT colleague Thorsten Strufe. “For example, if you regularly pass by a café that operates a WiFi network, you could be identified there without noticing it and be recognized later – for example by public authorities or companies.”

While Todt acknowledges that security services, cybercriminals and others do have much simpler ways of tracking individuals – for example by accessing data from CCTV cameras or video doorbells – he argues that the ubiquity of wireless networks lends itself to being co-opted as a near-permanent surveillance infrastructure. There is, he adds, “one concerning property” about wireless networks: “They are invisible and raise no suspicion.”

Although the possibility of using WiFi networks in this way is not new, most previous WiFi-based security attacks worked by analysing so-called channel state information (CSI). These data indicate how a radio signal changes when it reflects off walls, furniture, people or animals. However, the KASTEL researchers note that WiFi 5 (802.11ac) and later versions of the standard change the picture by enabling a new and potentially easier form of attack based on beamforming feedback information (BFI).

While beamforming uses information similar to CSI, Todt explains that it does so on the sender’s side instead of the receiver’s. This means that a BFI-based surveillance method would require nothing more than standard devices connected to the WiFi network. “The BFI could be used to create images from different perspectives that might then serve to identify persons that find themselves in the WiFi signal range,” Todt says. “The identity of individuals passing through these radio waves could then be extracted using a machine-learning model. Once trained, this model would make an identification in just a few seconds.”

In their experiments, Todt and colleagues studied 197 participants as they walked through a WiFi field while being simultaneously recorded with CSI and BFI from four different angles. The participants had five different “walking styles” (such as walking normally and while carrying a backpack) as well as different gaits. The researchers found that they could identify individuals with nearly 100% accuracy, regardless of the recording angle or the individual’s walking style or gait.

“The technology is powerful, but at the same time entails risks to our fundamental rights, especially to privacy,” says Strufe. He warns that authoritarian states could use the technology to track demonstrators and members of opposition groups, prompting him and his colleagues to “urgently call” for protective measures and privacy safeguards to be included in the forthcoming IEEE 802.11bf WiFi standard.

“The literature on all novel sensing solutions highlights their utility for various novel applications,” says Todt, “but the privacy risks that are inherent to such sensing are often overlooked, or worse — these sensors are claimed to be privacy-friendly without any rationale for these claims. As such, we feel it necessary to point out the privacy risks that novel solutions such as WiFi sensing bring with them.”

The researchers say they would like to see approaches developed that can mitigate the risk of identity inference attack. However, they are aware that this will be difficult, since this type of attack exploits the physical properties of the actual WiFi signal. “Ideally, we would influence the WiFi standard to contain privacy-protections in future versions,” says Todt, “but even the impact of this would be limited as there are already millions of WiFi devices out there that are vulnerable to such an attack.”

The post Is your WiFi spying on you? appeared first on Physics World.

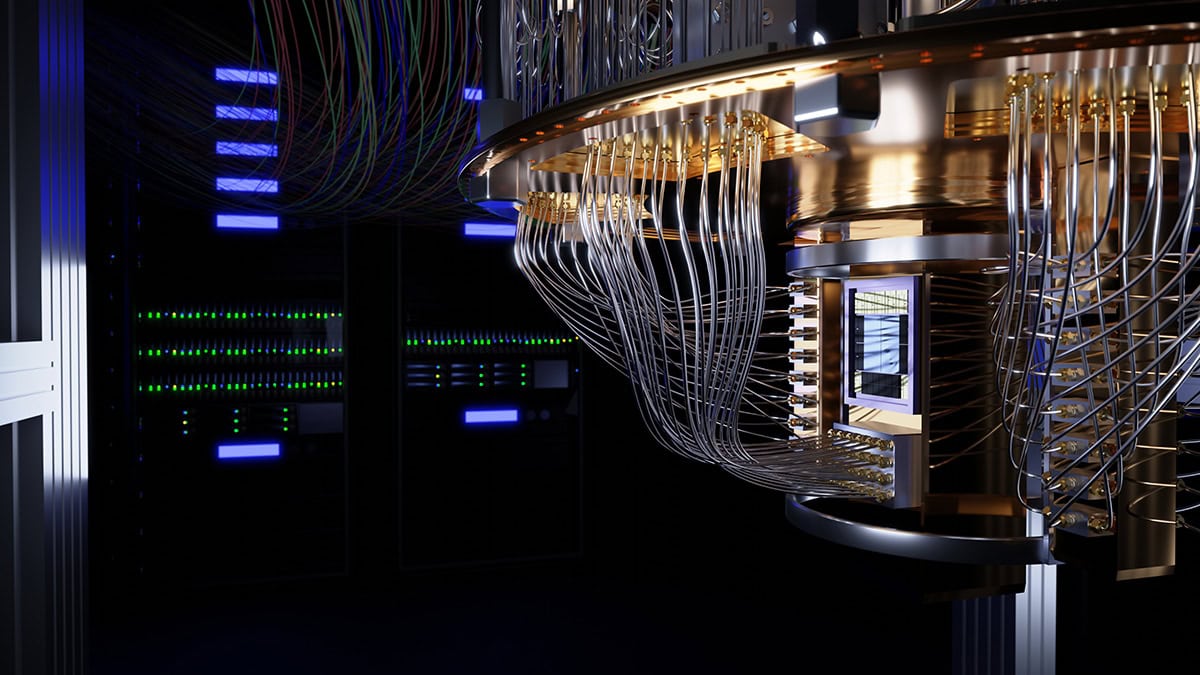

The standardization process helps to promote the legitimacy of emerging quantum technologies by distilling technical inputs and requirements from all relevant stakeholders across industry, research and government. Put simply: if you understand a technology well enough to standardize elements of it, that’s when you know it’s moved beyond hype and theory into something of practical use for the economy and society.

Standards will, over time, help the quantum technology industry achieve critical mass on the supply side, with those economies of scale driving down prices and increasing demand. As the nascent quantum supply chain evolves – linking component manufacturers, subsystem developers and full-stack quantum computing companies – standards will also ensure interoperability between products from different vendors and different regions.

Those benefits flow downstream as well because standards, when implemented properly, increase trust among end-users by defining a minimum quality of products, processes and services. Equally important, as new innovations are rolled out into the marketplace by manufacturers, standards will ensure compatibility across current and next-generation quantum systems, reducing the likelihood of lock-ins to legacy technologies.

I have strategic oversight of our core technical programmes in quantum computing, quantum networking, quantum metrology and quantum-enabled PNT (position, navigation and timing). It’s a broad-scope remit that spans research and training, as well as responsibility for standardization and international collaboration, with the latter two often going hand-in-hand.

Right now, we have over 150 people working within the NPL quantum metrology programme. Their collective focus is on developing the measurement science necessary to build, test and evaluate a wide range of quantum devices and systems. Our research helps innovators, whether in an industry or university setting, to push the limits of quantum technology by providing leading-edge capabilities and benchmarking to measure the performance of new quantum products and services.

That’s right. For starters, we have a team focusing on the inter-country strategic relationships, collaborating closely with colleagues at other National Metrology Institutes (like NIST in the US and PTB in Germany). A key role in this regard is our standards specialist who, given his background working in the standards development organizations (SDOs), acts as a “connector” between NPL’s quantum metrology teams and, more widely, the UK’s National Quantum Technology Programme and the international SDOs.

We also have a team of technical experts who sit on specialist working groups within the SDOs. Their inputs to standards development are not about advancing NPL’s interests. Rather, they provide expertise and experience gained from cutting-edge metrology, and they build a consolidated set of requirements gathered from stakeholders across the quantum community to further the UK’s strategic and technical priorities in quantum.

Absolutely. We believe that quantum metrology and standardization are key enablers of quantum innovation, fast-tracking the adoption and commercialization of quantum technologies while building confidence among investors and across the quantum supply chain and early-stage user base. For NPL and its peers, the task right now is to agree on the terminology and best practice as we figure out the performance metrics, benchmarks and standards that will enable quantum to go mainstream.

Front-and-centre is the UK Quantum Standards Network Pilot. This initiative – which is being led by NPL – brings together representatives from industry, academia and government to work on all aspects of standards development: commenting on proposals and draft standards; discussing UK standards policy and strategy; and representing the UK in the European and international SDOs. The end-game? To establish the UK as a leading voice in quantum standardization, both strategically and technically, and to ensure that UK quantum technology companies have access to global supply chains and markets.

The Quantum Standards Network Pilot also provides a direct line to prospective end-users of quantum technologies in business sectors like finance, healthcare, pharmaceuticals and energy. What’s notable is that the end-users are often preoccupied with questions that link in one way or another to standardization. For example: how well do quantum technologies stack up against current solutions? Are quantum systems reliable enough yet? What does quantum cost to implement and maintain, including long-term operational costs? Are there other emerging technologies that could do the same job? Is there a solid, trustworthy supply chain?

The quantum landscape is changing fast, with huge scope for disruptive innovation in quantum computing, quantum communications and quantum sensing. Faced with this level of complexity, NMI-Q leverages the combined expertise of the world’s leading National Metrology Institutes – from the G7 countries and Australia – to accelerate the development and adoption of quantum technologies.

No one country can do it all when it comes to performance metrics, benchmarks and standards in quantum science and technology. As such, NMI-Q’s priorities are to conduct collaborative pre-standardization research; develop a set of “best measurement practices” needed by industry to fast-track quantum innovation; and, ultimately, shape the global standardization effort in quantum. NPL’s prominent role within NMI-Q (I am the co-chair along with Barbara Goldstein of NIST) underscores our commitment to evidence-based decision-making in standards development and, ultimately, to the creation of a thriving quantum ecosystem.

Every day, our measurement scientists address cutting-edge problems in quantum – as challenging as anything they’ll have encountered previously in an academic setting. What’s especially motivating, however, is that the NPL is a mission-driven endeavour with measurement outcomes linking directly to wider societal and economic benefits – not just in the UK, but internationally as well.

Measurement for Quantum (M4Q) is a flagship NPL programme that provides industry partners with up to 20 days of quantum metrology expertise to address measurement challenges in applied R&D and product development. The service – which is free of charge for projects approved after peer review – helps companies to bridge the gap from technology prototype to full commercialization.

To date, more than two-thirds of the companies to participate in M4Q report that their commercial opportunity has increased as a direct result of NPL support. In terms of specifics, the M4Q offering includes the following services:

Performance metrics and benchmarks point the way to practical quantum advantage

End note: NPL retains copyright on this article.

The post Why quantum metrology is the driving force for best practice in quantum standardization appeared first on Physics World.

In proton exchange membrane water electrolysis (PEMWE) systems, voltage cycles dropping below a threshold are associated with reversible performance improvements, which remain poorly understood despite being documented in literature. The distinction between reversible and irreversible performance changes is crucial for accurate degradation assessments. One approach in literature to explain this behaviour is the oxidation and reduction of iridium. Iridium-based electrocatalyst activity and stability in PEMWE hinge on their oxidation state, influenced by the applied voltage. Yet, full-cell PEMWE dynamic performance remains under-explored, with a focus typically on stability rather than activity. This study systematically investigates reversible performance behaviour in PEMWE cells using Ir-black as an anodic catalyst. Results reveal a recovery effect when the low voltage level drops below 1.5 V, with further enhancements observed as the voltage decreases, even with a short holding time of 0.1 s. This reversible recovery is primarily driven by improved anode reaction kinetics, likely due to changing iridium oxidation states, and is supported by alignment between the experimental data and a dynamic model that links iridium oxidation/reduction processes to performance metrics. This model allows distinguishing between reversible and irreversible effects and enables the derivation of optimized operation schemes utilizing the recovery effect.

Tobias Krenz is a simulation and modelling engineer at Siemens Energy in the Transformation of Industry business area, focusing on reducing energy consumption and carbon-dioxide emissions in industrial processes. He completed his PhD at Leibniz University Hannover in February 2025. He earned a degree from Berlin University of Applied Sciences in 2017 and an MSc from Technische Universität Darmstadt in 2020.

Alexander Rex is a PhD candidate at the Institute of Electric Power Systems at Leibniz University Hannover. He holds a degree in mechanical engineering from Technische Universität Braunschweig, an MEng from Tongji University, and an MSc from Karlsruhe Institute of Technology (KIT). He was a visiting scholar at Berkeley Lab from 2024 to 2025.

The post Reversible degradation phenomenon in PEMWE cells appeared first on Physics World.

Ramy Shelbaya has been hooked on physics ever since he was a 12-year-old living in Egypt and read about the Joint European Torus (JET) fusion experiment in the UK. Biology and chemistry were interesting to him but never quite as “satisfying”, especially as they often seemed to boil down to physics in the end. “So I thought, maybe that’s where I need to go,” Shelbaya recalls.

His instincts seem to have led him in the right direction. Shelbaya is now chief executive of Quantum Dice, an Oxford-based start-up he co-founded in 2020 to develop quantum hardware for exploiting the inherent randomness in quantum mechanics. It closed its first funding round in 2021 with a seven-figure investment from a consortium of European investors, while also securing grant funding on the same scale.

Now providing cybersecurity hardware systems for clients such as BT, Quantum Dice is launching a piece of hardware for probabilistic computing, based on the same core innovation. Full of joy and zeal for his work, Shelbaya admits that his original decision to pursue physics was “scary”. Back then, he didn’t know anyone who had studied the subject and was not sure where it might lead.

Fortunately, Shelbaya’s parents were onboard from the start and their encouragement proved “incredibly helpful”. His teachers also supported him to explore physics in his extracurricular reading, instilling a confidence in the subject that eventually led Shelbaya to do undergraduate and master’s degrees in physics at École normale supérieure PSL in France.

He then moved to the UK to do a PhD in atomic and laser physics at the University of Oxford. Just as he was wrapping up his PhD, Oxford University Innovation (OUI) – which manages its technology transfer and consulting activities – launched a new initiative that proved pivotal to Shelbaya’s career.

OUI had noted that the university generated a lot of IP and research results that could be commercialized but that the academics producing it often favoured academic work over progressing the technology transfer themselves. On the other hand, lots of students were interested in entering the world of business.

To encourage those who might be business-minded to found their own firms, while also fostering more spin-outs from the university’s patents and research, OUI launched the Student Entrepreneurs’ Programme (StEP). A kind of talent show to match budding entrepreneurs with technology ready for development, StEP invited participants to team up, choose commercially promising research from the university, and pitch for support and mentoring to set up a company.

As part of Oxford’s atomic and laser physics department, Shelbaya was aware that it had been developing a quantum random number generator. So when the competition was launched, he collaborated with other competition participants to pitch the device. “My team won, and this is how Quantum Dice was born.”

The initial technology was geared towards quantum random number generation, for particular use in cybersecurity. Random numbers are at the heart of all encryption algorithms, but generating truly random numbers has been a stumbling block, with the “pseudorandom” numbers people make do with being prone to prediction and hence security violation.
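The weakness of pseudorandom numbers can be shown with a short Python sketch. It assumes an attacker has learned or guessed the generator's seed. The helper `pseudo_key` is hypothetical, and the `secrets` module draws on the operating system's entropy source, which is not a quantum device.

```python
import random
import secrets


def pseudo_key(seed, n_bytes=16):
    """Derive a 'key' from a seeded pseudorandom generator (not secure)."""
    rng = random.Random(seed)
    return bytes(rng.randrange(256) for _ in range(n_bytes))


# Anyone who knows the seed can regenerate exactly the same key.
victim_key = pseudo_key(seed=1234)
attacker_key = pseudo_key(seed=1234)
print("attacker reproduced the key:", victim_key == attacker_key)

# A key drawn from the operating system's entropy pool has no seed to guess.
os_key = secrets.token_bytes(16)
print("OS-sourced key:", os_key.hex())
```

A pseudorandom generator is fully determined by its internal state, so its output is only as secret as that state. Hardware entropy sources, quantum or otherwise, aim to remove this dependence.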

Quantum mechanics provides a potential solution because there is inherent randomness in the values of certain quantum properties. Although for a long time this randomness was “a bane to quantum physicists”, as Shelbaya puts it, Quantum Dice and other companies producing quantum random number generators are now harnessing it for useful technologies.

Where Quantum Dice sees itself as having an edge over its competitors is in its real-time quality assurance of the true quantum randomness of the device’s output. This means it should be able to spot any corruption to the output due to environmental noise or someone tampering with the device, which is an issue with current technologies.
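The article does not describe how Quantum Dice performs its quality assurance, so the sketch below is not the company's method. It shows a generic statistical health check, the monobit (frequency) test from the NIST SP 800-22 test suite, which flags an output stream whose balance of ones and zeros is implausible for a fair source. Passing such a test is necessary but does not by itself certify that the randomness is quantum in origin. The "stuck" stream is a simulated fault.

```python
import math
import secrets


def bytes_to_bits(data):
    """Expand bytes into a list of bits, least significant bit first."""
    return [(byte >> i) & 1 for byte in data for i in range(8)]


def monobit_p_value(bits):
    """Monobit frequency test: p-value for 'ones and zeros are balanced'."""
    n = len(bits)
    s = sum(1 if b else -1 for b in bits)
    return math.erfc(abs(s) / math.sqrt(2 * n))


healthy = bytes_to_bits(secrets.token_bytes(1024))
stuck = [1] * len(healthy)  # simulated fault: output stuck at 1

for name, stream in (("healthy", healthy), ("stuck", stuck)):
    p = monobit_p_value(stream)
    verdict = "pass" if p >= 0.01 else "FAIL"
    print(f"{name}: p = {p:.3g} -> {verdict}")
```

Running a check like this continuously on the output is one way a fault or crude tampering could be spotted. A truly random stream will still fail occasionally, at a rate set by the chosen threshold.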

Quantum Dice already offers Quantum Random Number Generator (QRNG) products in a range of form factors that integrate directly within servers, PCs and hardware security systems. Clients can also access the company’s cloud-based solution – Quantum Entropy-as-a-Service – which, powered by its QRNG hardware, integrates into the Internet of Things and cloud infrastructure.

Recently Quantum Dice has also launched a probabilistic computing processor based on its QRNG for use in algorithms centred on probabilities. These are often geared towards optimization problems that apply in a number of sectors, including supply chains and logistics, finance, telecommunications and energy, as well as simulating quantum systems, and Boltzmann machines – a type of energy-based machine learning model for which Shelbaya says researchers “have long sought efficient hardware”.

After winning the start-up competition in 2019, things got trickier when Quantum Dice was ready to be incorporated, which occurred just at the start of the first COVID-19 lockdown. Shelbaya moved the prototype device into his living room because it was the only place they could ensure access to it, but it turned out the real challenges lay elsewhere.

“One of the first things we needed was investments, and really, at that stage of the company, what investors are investing in is you,” explains Shelbaya, highlighting how difficult this is when you cannot meet in person. On the plus side, since all his meetings were remote, he could speak to investors in Asia in the morning, Europe in the afternoon and the US in the evening, all within the same day.

Another challenge was how to present the technology simply enough so that people would understand and trust it, while not making it seem so simple that anyone could be doing it. “There’s that sweet spot in the middle,” says Shelbaya. “That is something that took time, because it’s a muscle that I had never worked.”

The company performed well for its size and sector in terms of securing investments when their first round of funding closed in 2021. Shelbaya is shy of attributing the success to his or even the team’s abilities alone, suggesting this would “underplay a lot of other factors”. These include the rising interest in quantum technologies, and the advantages of securing government grant funding programmes at the same time, which he feels serves as “an additional layer of certification”.

For Shelbaya, every day is different and so are the challenges. Quantum Dice is a small new company, where many of the 17 staff are still fresh from university, so nurturing trust among clients, particularly in the high-stakes world of cybersecurity, is no small feat. Managing a group of ambitious, energetic and driven young people can be complicated too.

But there are many rewards, ranging from seeing a piece of hardware finally work as intended and closing a deal with a client, to simply seeing a team “you have been working to develop, working together without you”.

For others hoping to follow a similar career path, Shelbaya’s advice is to do what you enjoy – not just because you will have fun but because you will be good at it too. “Do what you enjoy,” he says, “because you will likely be great at it.”

The post Ramy Shelbaya: the physicist and CEO capitalizing on quantum randomness appeared first on Physics World.

Researchers in the US and Korea have created nanoparticles with carefully designed “patches” on their surfaces using a new atomic stencilling technique. These patches can be controlled with incredible precision, and could find use in targeted drug delivery, catalysis, microelectronics and tissue engineering.

The first step in the stencilling process is to create a mask on the surface of gold nanoparticles. This mask prevents a “paint” made from grafted-on polymers from attaching to certain areas of the nanoparticles.

“We then use iodide ions as a stencil,” explains Qian Chen, a materials scientist and engineer at the University of Illinois at Urbana-Champaign, US, who led the new research effort. “These adsorb (stick) to the surface of the nanoparticles in specific patterns that depend on the shape and atomic arrangement of the nanoparticles’ facets. That’s how we create the patches – the areas where the polymers selectively bind.” Chen adds that she and her collaborators can then tailor the surface chemistry of these tiny patchy nanoparticles in a very controlled way.

The team decided to develop the technique after realizing there was a gap in the field of microfabrication stencilling. While techniques in this area have advanced considerably in recent years, allowing ever-smaller microdevices to be incorporated into ever-faster computer chips, most of them rely on top-down approaches for precisely controlling nanoparticles. By comparison, Chen says, bottom-up methods have been largely unexplored even though they are low-cost, solution-processable, scalable and compatible with complex, curved and three-dimensional surfaces.

Reporting their work in Nature, the researchers say they were inspired by the way proteins naturally self-assemble. “One of the holy grails in the field of nanomaterials is making complex, functional structures from nanoscale building blocks,” explains Chen. “It’s extremely difficult to control the direction and organization of each nanoparticle. Proteins have different surface domains, and thanks to their interactions with each other, they can make all the intricate machines we see in biology. We therefore adopted that strategy by creating patches or distinct domains on the surface of the nanoparticles.”

Philip Moriarty, a physicist at the University of Nottingham, UK, who was not involved in the project, describes it as “elegant and impressive” work. “Chen and colleagues have essentially introduced an entirely new mode of self-assembly that allows for much greater control of nanoparticle interactions,” he says, “and the ‘atomic stencil’ concept is clever and versatile.”

The team, which includes researchers at the University of Michigan, Pennsylvania State University, Cornell, Brookhaven National Laboratory and Korea’s Chonnam National University as well as Urbana-Champaign, agrees that the potential applications are vast. “Since we can now precisely control the surface properties of these nanoparticles, we can design them to interact with their environment in specific ways,” explains Chen. “That opens the door for more effective drug delivery, where nanoparticles can target specific cells. It could also lead to new types of catalysts, more efficient microelectronic components and even advanced materials with unique optical and mechanical properties.”

She and her colleagues say they now want to extend their approach to different types of nanoparticles and different substrates to find out how versatile it truly is. They will also be developing computational models that can predict the outcome of the stencilling process – something that would allow them to design and synthesize patchy nanoparticles for specific applications on demand.

The post ‘Patchy’ nanoparticles emerge from new atomic stencilling technique appeared first on Physics World.

The $330m Jiangmen Underground Neutrino Observatory (JUNO) has released its first results following the completion of the huge underground facility in August.

JUNO is located in Kaiping City, Guangdong Province, in the south of China around 150 km west of Hong Kong.

Construction of the facility began in 2015 and was set to be complete some five years later. Yet the project suffered from serious flooding, which delayed construction.

JUNO, which is expected to run for more than 30 years, aims to study the relationship between the three types of neutrino: electron, muon and tau. Although JUNO will be able to detect neutrinos produced by supernovae as well as those from Earth, the observatory will mainly measure the energy spectrum of electron antineutrinos released by the Yangjiang and Taishan nuclear power plants, which both lie 52.5 km away.

To do this, the facility has an 80 m high and 50 m diameter experimental hall located 700 m underground. Its main feature is a 35 m diameter spherical neutrino detector, containing 20,000 tonnes of liquid scintillator. When an electron antineutrino occasionally bumps into a proton in the liquid, it triggers a reaction that results in two flashes of light that are detected by the 43,000 photomultiplier tubes that observe the scintillator.

On 18 November, a paper was submitted to the arXiv preprint server concluding that the detector’s key performance indicators fully meet or surpass design expectations.

New measurement

Neutrinos oscillate from one flavour to another as they travel near the speed of light, rarely interacting with matter. This oscillation is a result of each flavour being a combination of three neutrino mass states.

Scientists do not know the absolute masses of the three neutrinos, but they can measure the neutrino oscillation parameters, known as θ₁₂, θ₂₃ and θ₁₃, as well as the differences between the squared masses (Δm²) of pairs of neutrino mass states.
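To see how a reactor experiment is sensitive to these parameters, the Python sketch below evaluates the standard two-flavour approximation for the probability that an electron antineutrino is still an electron antineutrino after travelling a given distance. It keeps only the slow oscillation driven by θ₁₂ and Δm²₂₁ and neglects the smaller θ₁₃ terms. The parameter values are rounded, illustrative figures rather than JUNO's results, and the function name is hypothetical.

```python
import math


def survival_probability(energy_mev, baseline_km=52.5,
                         sin2_theta12=0.307, dm2_21_ev2=7.5e-5):
    """Two-flavour electron-antineutrino survival probability.

    P = 1 - sin^2(2*theta12) * sin^2(1.267 * dm2 [eV^2] * L [m] / E [MeV])
    The default parameter values are illustrative assumptions.
    """
    sin2_2theta = 4.0 * sin2_theta12 * (1.0 - sin2_theta12)
    phase = 1.267 * dm2_21_ev2 * (baseline_km * 1000.0) / energy_mev
    return 1.0 - sin2_2theta * math.sin(phase) ** 2


# Typical reactor antineutrino energies are a few MeV.
for energy in (2.0, 3.0, 4.0, 6.0, 8.0):
    print(f"E = {energy:.1f} MeV -> P(survive) = {survival_probability(energy):.3f}")
```

With these inputs the suppression is strongest at around 3 MeV for a baseline of roughly 50 km, which illustrates why measuring the energy spectrum at that distance constrains both the mixing angle and the mass splitting.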

A second JUNO paper submitted on 18 November used data collected between 26 August and 2 November to measure the solar neutrino oscillation parameters θ₁₂ and Δm²₂₁ with a factor of 1.6 better precision than previous experiments.

Those earlier results, which used solar neutrinos instead of reactor antineutrinos, showed a 1.5 “sigma” discrepancy with the Standard Model of particle physics. The new JUNO measurements confirmed this difference, dubbed the solar neutrino tension, but further data will be needed to prove or disprove the finding.

“Achieving such precision within only two months of operation shows that JUNO is performing exactly as designed,” says Yifang Wang from the Institute of High Energy Physics of the Chinese Academy of Sciences, who is JUNO project manager and spokesperson. “With this level of accuracy, JUNO will soon determine the neutrino mass ordering, test the three-flavour oscillation framework, and search for new physics beyond it.”

JUNO, which is an international collaboration of more than 700 scientists from 75 institutions across 17 countries including China, France, Germany, Italy, Russia, Thailand, and the US, is the second neutrino experiment in China, after the Daya Bay Reactor Neutrino Experiment. Daya Bay successfully measured a key neutrino oscillation parameter called θ₁₃ in 2012 before being closed down in 2020.

JUNO is also one of three next-generation neutrino experiments, the other two being the Hyper-Kamiokande in Japan and the Deep Underground Neutrino Experiment in the US. Both are expected to become operational later this decade.

The post Scientists in China celebrate the completion of the underground JUNO neutrino observatory appeared first on Physics World.

New experiments at CERN by an international team have ruled out a potential source of intergalactic magnetic fields. The existence of such fields is invoked to explain why we do not observe secondary gamma rays originating from blazars.

Led by Charles Arrowsmith at the UK’s University of Oxford, the team suggests the absence of gamma rays could be the result of an unexplained phenomenon that took place in the early universe.

A blazar is an extraordinarily bright object with a supermassive black hole at its core. Some of the matter falling into the black hole is accelerated outwards in a pair of opposing jets, creating intense beams of radiation. If a blazar jet points towards Earth, we observe a bright source of light including high-energy teraelectronvolt gamma rays.

During their journey across intergalactic space, these gamma-ray photons will occasionally collide with the background starlight that permeates the universe. These collisions can create cascades of electrons and positrons that can then scatter off photons to create gamma rays in the gigaelectronvolt energy range. These gamma-rays should travel in the direction of the original jet, but this secondary radiation has never been detected.

Magnetic fields could be the reason for this dearth, as Arrowsmith explains: “The electrons and positrons in the pair cascade would be deflected by an intergalactic magnetic field, so if this is strong enough, we could expect these pairs to be steered away from the line of sight to the blazar, along with the reprocessed gigaelectronvolt gamma rays.” It is not clear, however, that such fields exist – and if they do, what could have created them.

Another explanation for the missing gamma rays involves the extremely sparse plasma that permeates intergalactic space. The beam of electron–positron pairs could interact with this plasma, generating magnetic fields that separate the pairs. Over millions of years of travel, this process could lead to beam–plasma instabilities that reduce the beam’s ability to create gigaelectronvolt gamma rays that are focused on Earth.

Oxford’s Gianluca Gregori explains, “We created an experimental platform at the HiRadMat facility at CERN to create electron–positron pairs and transport them through a metre-long ambient argon plasma, mimicking the interaction of pair cascades from blazars with the intergalactic medium”. Once the pairs had passed through the plasma, the team measured the degree to which they had been separated.

Called Fireball, the experiment found that the beams remained far more tightly focused than expected. “When these laboratory results are scaled up to the astrophysical system, they confirm that beam–plasma instabilities are not strong enough to explain the absence of the gigaelectronvolt gamma rays from blazars,” Arrowsmith explains. Unless the pair beam is perfectly collimated, or composed of pairs with exactly equal energies, instabilities were actively suppressed in the plasma.

While the experiment suggests that an intergalactic magnetic field remains the best explanation for the lack of gamma rays, the mystery is far from solved. Gregori explains, “The early universe is believed to be extremely uniform – but magnetic fields require electric currents, which in turn need gradients and inhomogeneities in the primordial plasma.” As a result, confirming the existence of such a field could point to new physics beyond the Standard Model, which may have dominated in the early universe.

More information could come with the opening of the Cherenkov Telescope Array Observatory. This will comprise ground-based gamma-ray detectors planned across facilities in Spain and Chile, which will vastly improve on the resolutions of current-generation detectors.

The research is described in PNAS.

The post Accelerator experiment sheds light on missing blazar radiation appeared first on Physics World.

One thing I can say for sure that I got from working in academia is the ability to quickly read, summarize and internalize information from a bunch of sources. Journalism requires a lot of that. Being able to skim through papers – reading the abstract, reading the conclusion, picking the right bits from the middle and so on – that is a life skill.

In terms of other skills, I’m always considering who’s consuming what I’m doing rather than just thinking about how I’d like to say something. You have to think about how it’s going to be received – what’s the person on the street going to hear? Is this clear enough? If I were hearing this for the first time, would I understand it? Putting yourself in someone else’s shoes – be it the listener, reader or viewer – is a skill I employ every day.

The best thing is the variety. I ended up in this business and not in scientific research because of a desire for a greater breadth of experience. And boy, does this job have it. I get to talk to people around the world about what they’re up to, what they see, what it’s like, and how to understand it. And I think that makes me a much more informed person than I would be had I chosen to remain a scientist.

When I did research – and even when I was a science journalist – I thought “I don’t need to think about what’s going on in that part of the world so much because that’s not my area of expertise.” Now I have to, because I’m in this chair every day. I need to know about lots of stuff, and I like that feeling of being more informed.

I suppose what I like the least about my job is the relentlessness of it. It is a newsy time. It’s the flip side of being well informed: you’re forced to confront lots of bad things – the horrors that are going on in the world, the fact that in a lot of places the bad guys are winning.

When I started in science journalism, I wasn’t a journalist – I was a scientist pretending to be one. So I was always trying to show off what I already knew as a sort of badge of legitimacy. I would call some professor on a topic that I wasn’t an expert in yet just to have a chat to get up to speed, and I would spend a bunch of time showing off, rabbiting on about what papers I’d read and what I knew, just to feel like I belonged in the room or on that call. And it’s a waste of time. You have to swallow your ego and embrace the idea that you may sound like you don’t know stuff even if you do. You might sound dumber, but that’s okay – you’ll learn more and faster, and you’ll probably annoy people less.

In journalism in particular, you don’t want to preload the question with all of the things that you already know because then the person you’re speaking to can fill in those blanks – and they’re probably going to talk about things you didn’t know you didn’t know, and take your conversation in a different direction.

It’s one of the interesting things about science in general. If you go into a situation with experts, and are open and comfortable about not knowing it all, you’re showing that you understand that nobody can know everything and that science is a learning process.

The post Ask me anything: Jason Palmer – ‘Putting yourself in someone else’s shoes is a skill I employ every day’ appeared first on Physics World.

Back in 2007, Apple released Leopard, the sixth version of Mac OS X.

Criticism quickly poured in: too many new features, accompanied by too many recurring bugs.

To address the widespread discontent, Apple decided to pause new features and, two years later, released Snow Leopard. That version brought few new features. Instead, the emphasis was on fixing bugs and improving performance.

According to the latest reports, the company is preparing to do the same with iOS 27. The update will bring few visible new features. In exchange, everything will be reworked to optimize performance and stability. The goal is to create a stable foundation for the future.

We will not hide the fact that this is excellent news, though it still needs to be confirmed. That is all the more true because there is now a crucial additional factor: security.

In 2009, few hackers were really interested in carrying out mass attacks on Macs. Today, every iOS update comes with multiple security fixes, because vulnerabilities can be worth fortunes. Pausing new features and reviewing the code in depth should therefore also make iOS more robust against incessant attacks.

A few weeks ago, Amazon announced and then released its new range of Echo devices. Today, we take a look at the Echo Dot Max together.

Today's model is available in three colours: graphite (the model we were kindly sent), white and amethyst. All three are available at the same price, €109.99 outside of promotions.

On to the review!

For some time now, Amazon-branded product packaging has been understated, and we love it. Here there is a light blue band across the top of the box, with the Amazon name on the front and, just below it, an image of the device and its name. Everything comes in a recycled and recyclable cardboard box. On the right, we again find the model name and its compatibilities.

On the left, in several languages, we learn that our Echo Dot Max works with both Alexa and Alexa+. Finally, on the back, again in several languages, we are told that this is a smart speaker with Alexa, and that the box includes a graphite Echo Dot Max and a power adapter. Of course, we are also reminded that Wi-Fi and the Amazon Alexa app are required.

| Dimensions | 108.7 x 108.6 x 99.2 mm (W x H x D) |
| Weight | 505.3 g. Exact weight and size may vary depending on the manufacturing process. |
| Audio bandwidth | 53 Hz-16 kHz (volume 6) |
| Speaker size | 1 x 20 mm tweeter, 1 x 63 mm woofer |
| Playback channels | Two-way mono audio |
| Audio signal processing technology | Lossless high-definition audio, automatic room adaptation |
| Wi-Fi connectivity | Wi-Fi 6E 11a/b/g/n/ac/ax 1×1, Bluetooth wireless technology/Bluetooth Low Energy 5.3 |
| Built-in smart home hub | Zigbee + Matter + Thread Border Router |
| Bluetooth connectivity | Supports the Advanced Audio Distribution Profile (A2DP) for streaming audio from your mobile device to Echo Dot Max or from Echo Dot Max to your Bluetooth speaker. Supports the Audio/Video Remote Control Profile (AVRCP) for voice control of connected mobile devices. Hands-free voice control is not supported for Mac OS X devices. Bluetooth speakers that require a PIN code are not supported. |
| Processor | AZ3 with AI accelerator |
| Sensors | Ambient temperature sensor, ambient light sensor, presence detection, accelerometer (for tap gestures) |
| System requirements | Echo Dot Max is ready to connect to your Wi-Fi. The Alexa app is compatible with Fire OS, Android and iOS devices (see supported operating systems). Some Skills and services may require a subscription or other fees. |
| Setup requirements | Amazon Wi-Fi simple setup lets customers connect smart devices to their Wi-Fi network in a few easy steps. Wi-Fi simple setup is another way Alexa keeps getting better. |

When Amazon unveiled its new 2025 Echo range, it was impossible not to notice the Dot Max, presented as the little speaker that wants to play in the big leagues. At Vonguru, we like it when manufacturers show ambition, so we put the device on a desk, plugged in the cable, woke up Alexa, and looked at what this promising little ball really had inside.

Right out of the box, the Echo Dot Max surprises. It keeps the spherical shape that made the range a success, but Amazon has introduced a flat side that houses the volume buttons, the microphone control and the signature LED. This detail changes everything: the speaker looks less like a gadget and more like an unapologetically high-tech object. The woven fabric wraps neatly around the shell, and the whole thing feels solid, dense, almost premium in spirit. You want to put it in a living room, a bedroom or even an office without feeling that you are spoiling your decor.

But it was obviously not for the design that we were waiting for this model: it was for the sound. Amazon promised bass up to three times deeper than on a standard Echo Dot. And indeed, on first listen, you can tell that the speaker is aiming for a noticeably fuller sound. The bass unfolds with a certain confidence, giving real presence to chill, electro or pop music. The mids are clean, the voices well articulated, and the highs rise without harshness. Admittedly, if you push the volume very high, you can hear a little distortion and notice that the balance does not reach the refinement of the very large speakers on the market. But put back in its context, a mini smart speaker at just over 100 euros, the result is frankly good. You can fill a medium-sized room without frustration, which was not always the case with previous models.

On the intelligence side, the AZ3 chip does a remarkable job. The Echo Dot Max reacts faster and hears better, especially in noisy environments. You can speak from another room without raising your voice, and Alexa picks up the instruction. This does cause a problem, however, if, like me, you already have other Echo devices at home. Here, it is always the Dot Max that answers me, even though I more often speak to my Echo Show 15 in the kitchen.

The "Omnisense" sensors, built in to make the device more "proactive", seem to bring a smoother logic to interactions, even if their full potential will only make sense once Alexa+, the AI-boosted version, is actually available in France. For now, the wait goes on (and it is a long one), and that is probably the most frustrating point about this product: it makes you want a future that is not quite here yet.

The Echo Dot Max also establishes itself as a solid little smart home hub, compatible with Zigbee, Matter and Thread. It can control bulbs, plugs or sensors without the need to invest in an additional bridge. It is practical, simple and very accessible for those who want to build a small connected ecosystem.

Of course, the device is not without limits. The sound balance can lack a touch of finesse on some tracks, and those thinking "home studio" should look to the Echo Studio instead. On the other hand, if we stick to its main use, a pleasant speaker with a responsive voice assistant, the Echo Dot Max ticks most of the right boxes. For me, an office or a bedroom is the ideal place for it.

To conclude, this speaker clearly gives the impression of being a logical evolution of what an Echo Dot should be in 2025: more ambitious, more powerful, better designed, and above all forward-looking. It does not revolutionize sound, but it improves the experience enough to make it immediately pleasant in everyday use. If you are looking for a compact speaker that does much more than play music, and that fits perfectly into a home that is already somewhat connected, the Echo Dot Max fully deserves its place in your home.

However, the arrival of Alexa+ is increasingly overdue, and we cannot wait to see whether the full potential of this new feature will really be used. To be continued!

As a reminder, today's model is available in three colours: graphite (the model we were kindly sent), white and amethyst. All three are available at the same price, €109.99 outside of promotions.

Review – Amazon's Echo Dot Max, to read on Vonguru.

The return of the Silent Hill series with Silent Hill f, and of Konami, was rather unexpected. After years of silence, the saga comes back to us stronger than ever. And while we have already been able to try the Silent Hill 2 remake, we now bring you this review of Silent Hill f, which takes the saga out of its home town. There is no Silent Hill here, but a brand-new small town that is no more reassuring. Is the game worth it? Verdict in the lines below!

It pains me a little to give "only" 14/20 in this review of Silent Hill f, but its mistakes cost it dearly. And, in the end, they weigh quite heavily in the balance. Sumptuous both artistically and technically, Konami's game is a feast for the eyes. But it is hugely disappointing when it comes to its puzzles, which are really bad. Add to that combat phases that are too long, levels that are too long and a story that is convoluted for little payoff, and you get a gaming experience that can be described as good, but not without flaws.

What I will remember most is the quality of immersion in this Silent Hill f. With its grandiose settings, successful boss fights and a fascinating urban-exploration side, I hope this game gets a sequel that fixes the nasty teething problems of this first instalment! There is clearly immense potential!

The article Silent Hill f review | Boldness, but slugs in the salad first appeared on PLAYERONE.TV.

Physicists working on the Antihydrogen Laser Physics Apparatus (ALPHA) experiment at CERN have trapped and accumulated 15,000 antihydrogen atoms in less than 7 h. This accumulation rate is more than 20 times the previous record. Large ensembles of antihydrogen could be used to search for tiny, unexpected differences between matter and antimatter – which if discovered could point to physics beyond the Standard Model.

According to the Standard Model, every particle has an antimatter counterpart – or antiparticle. It also says that roughly equal amounts of matter and antimatter were created in the Big Bang. But today there is much more matter than antimatter in the visible universe, and the reason for this “baryon asymmetry” is one of the most important mysteries of physics.

The Standard Model predicts the properties of antiparticles. An antiproton, for example, has the same mass as a proton and the opposite charge. The Standard Model also predicts how antiparticles interact with matter and antimatter. If physicists could find discrepancies between the measured and predicted properties of antimatter, it could help explain the baryon asymmetry and point to other new physics beyond the Standard Model.

Just as a hydrogen atom comprises a proton bound to an electron, an antihydrogen antiatom comprises an antiproton bound to an antielectron (positron). Antihydrogen offers physicists several powerful ways to probe antimatter at a fundamental level. Trapped antiatoms can be released in freefall to determine if they respond to gravity in the same way as atoms. Spectroscopy can be used to make precise measurements of how the electromagnetic force binds the antiproton and positron in antihydrogen with the aim of finding differences compared to hydrogen.

So far, antihydrogen’s gravitational and electromagnetic properties appear to be identical to those of hydrogen. However, these experiments were done using small numbers of antiatoms, and having access to much larger ensembles would improve the precision of such measurements and could reveal tiny discrepancies. The difficulty is that creating and storing antihydrogen is very hard.

Today, antihydrogen can only be made in significant quantities at CERN in Switzerland. There, a beam of protons is fired at a solid target, creating antiprotons that are then cooled and stored using electromagnetic fields. Meanwhile, positrons are gathered from the decay of radioactive nuclei and cooled and stored using electromagnetic fields. These antiprotons and positrons are then combined in a special electromagnetic trap to create antihydrogen.

This process works best when the antiprotons and positrons have very low kinetic energies (temperatures) when combined. If the energy is too high, many antiatoms will escape the trap. So, it is crucial that the positrons and antiprotons are as cold as possible.

Recently, ALPHA physicists have used a technique called sympathetic cooling on positrons, and in a new paper they describe their success. Sympathetic cooling has been used for several decades to cool atoms and ions. It originally involved mixing a hard-to-cool atomic species with atoms that are relatively easy to cool using lasers. Energy is transferred between the two species via the electromagnetic interaction, which chills the hard-to-cool atoms.

The ALPHA team used beryllium ions to sympathetically cool positrons to 10 K, which is five degrees colder than previously achieved using other techniques. These cold positrons boosted the efficiency of the creation and trapping of antihydrogen, allowing the team to accumulate 15,000 antihydrogen atoms in less than 7 h. This is more than a 20-fold improvement over their previous record of accumulating 2000 antiatoms in 24 h.
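The quoted improvement can be checked with a quick rate comparison. The sketch below uses only the figures given above (15,000 antiatoms in 7 h against 2000 in 24 h); the function name is a hypothetical helper, and because the new run took "less than 7 h", the computed ratio should be read as a lower bound.

```python
def accumulation_rate(atoms, hours):
    """Average number of antihydrogen atoms accumulated per hour."""
    return atoms / hours


# Figures taken from the article text.
new_rate = accumulation_rate(15_000, 7)   # new record (7 h is an upper limit on the time)
old_rate = accumulation_rate(2_000, 24)   # previous record

improvement = new_rate / old_rate

print(f"New rate: {new_rate:.0f} antiatoms per hour")
print(f"Old rate: {old_rate:.1f} antiatoms per hour")
print(f"Improvement factor: at least {improvement:.1f}")
```

The ratio comes out above 25, which is consistent with the article's description of a more than 20-fold improvement.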

“These numbers would have been considered science fiction 10 years ago,” says ALPHA spokesperson Jeffrey Hangst, who is at Denmark’s Aarhus University.

Team member Maria Gonçalves, a PhD student at the UK’s Swansea University, says, “This result was the culmination of many years of hard work. The first successful attempt instantly improved the previous method by a factor of two, giving us 36 antihydrogen atoms”.

The effort was led by Niels Madsen of the UK’s Swansea University. He enthuses, “It’s more than a decade since I first realized that this was the way forward, so it’s incredibly gratifying to see the spectacular outcome that will lead to many new exciting measurements on antihydrogen”.

The cooling technique is described in Nature Communications.

The post Sympathetic cooling gives antihydrogen experiment a boost appeared first on Physics World.